zfs disk replacement

~ ~ ~ ~ ~

one of the scariest things i've ever seen

~ ~ ~ ~ ~


i always worry about what's happening to my stuff when i'm out of town for an extended period and once again i've been proven right.

i came home after traveling for christmas this year only to find that one of the disks in my big zfs pool had failed:

```
$ zpool status
  pool: nas
 state: DEGRADED
status: One or more devices has been removed by the administrator.
        Sufficient replicas exist for the pool to continue functioning in a
        degraded state.
action: Online the device using zpool online' or replace the device with
        'zpool replace'.
  scan: scrub repaired 0B in 1 days 00:18:14 with 0 errors on Mon Dec 15 00:42:16 2025
config:

        NAME                        STATE     READ WRITE CKSUM
        nas                         DEGRADED     0     0     0
          raidz2-0                  DEGRADED     0     0     0
            wwn-0x50000399bc300585  ONLINE       0     0     0
            wwn-0x5000cca252c5813c  ONLINE       0     0     0
            wwn-0x50000399bbc00a3b  ONLINE       0     0     0
            wwn-0x5000c500c4feab93  ONLINE       0     0     0
            wwn-0x5000cca252c58dee  ONLINE       0     0     0
            wwn-0x5000cca252c4ef8f  REMOVED      0     0     0

errors: No known data errors
```

i like that it blames the administrator

i definitely did NOT remove one or more devices from this pool, so i took a look at the admin portal to see what was wrong.

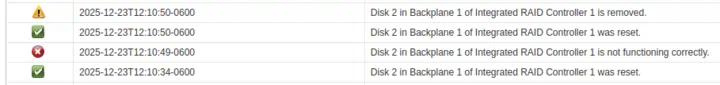

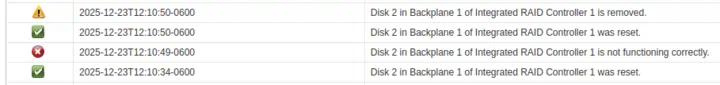

sure enough, there was a hardware fault while i was away:

and when i ventured into the basement to confirm, only disk 2 had a sad flashing light and a clear click of death

fortunately, since it was only one disk out of the six in the raidz2 array, no data's been lost yet

i ordered another hard drive to replace the one that failed, and now that it's finally arrived i'm writing this blog post to outline the steps i followed to replace the failed disk, mostly for my own future reference

disk replacement steps

these are the steps i followed on debian 12, running linux kernel 6.1.0 and zfs 2.1.11

0. take the failed device offline

in my case the disk is already showing up as REMOVED, but if zfs is telling you something like UNAVAIL instead, you'll want to take the failed device offline before replacing it.

you can do this with zpool offline (not zpool remove, which is for things like hot spares, cache and log devices, and whole top-level vdevs, and can't pull a single disk out of a raidz vdev). just use the id of the old disk to indicate which device you want to take offline:

```
$ sudo zpool offline nas $OLD_ID
```
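if you're not sure which id belongs to the failed disk, it's the one whose STATE isn't ONLINE in zpool status. here's a small sketch of a helper for pulling it out; `failed_devices` is a made-up name, not part of zfs, and it assumes the usual zpool status layout where the device name is the first column and the state is the second:

```shell
#!/bin/sh
# failed_devices (hypothetical helper): read `zpool status` output on stdin
# and print the name of every device whose STATE column is REMOVED, FAULTED,
# UNAVAIL or OFFLINE. the pool and vdev lines say DEGRADED, so they're skipped.
failed_devices() {
  awk '$2 ~ /^(REMOVED|FAULTED|UNAVAIL|OFFLINE)$/ { print $1 }'
}

# usage on a real system:
#   zpool status nas | failed_devices
```

on my pool this would print `wwn-0x5000cca252c4ef8f`. if more than one line comes back, stop and figure out what's going on before offlining anything. if zfs lists a missing device by a long numeric guid instead of a wwn, that guid should work with zpool offline and zpool replace too.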

1. install the new device

this part is easy; your computer will probably be different from mine but the basic steps are the same. my hardware supports hot swapping the disks, so i just pulled out the old one and slid in the new one - no restart required.

2. find the new disk

it's a good idea to add disks to a zfs pool by WWN rather than by target name (like /dev/sda) or partition ID, since the WWN is unique to each disk and won't change if disks get moved around.

the zfs array i'm working on, for example, has lived in three different computers, and i haven't had any issues moving it between machines because of how it's set up.

on linux you can find your disks listed by id like this:

```
$ ls /dev/disk/by-id
ata-PLDS_DVD+_-RW_DS-8ABSH_JD6H1PLC0085B51CTA00
ata-ST8000DM004-2CX188_ZCT23WC6
...
wwn-0x5000cca252c58dee
wwn-0x5000cca252c58dee-part1
wwn-0x5000cca252c58dee-part9
```

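you're looking for identifiers starting with wwn, and you want the whole disk rather than the -partN entries (those are partitions, like the ones zfs creates on disks that are already in a pool). find your new disk in this list and use its id to add it to your zfs pool.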
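to narrow that list down, here's a rough sketch of a helper (`unused_wwn_ids` is a made-up name) that prints the whole-disk wwn ids that zpool status doesn't mention. it assumes the pool's members are listed by wwn like mine are; your boot disk and any other disks outside the pool will show up too, so double-check the model and size before using one:

```shell
#!/bin/sh
# unused_wwn_ids [DIR] (hypothetical helper): read `zpool status` output on
# stdin and print each whole-disk wwn-* entry in DIR (default /dev/disk/by-id)
# that the status output doesn't mention. -partN entries are skipped.
unused_wwn_ids() {
  id_dir="${1:-/dev/disk/by-id}"
  pool_status="$(cat)"
  for dev_path in "$id_dir"/wwn-*; do
    [ -e "$dev_path" ] || continue        # the glob matched nothing
    id="${dev_path##*/}"                  # strip the directory part
    case "$id" in
      *-part*) continue ;;                # partitions, not whole disks
    esac
    # -F: fixed string, -w: whole word, so one id can't match inside another
    if ! printf '%s\n' "$pool_status" | grep -qwF -- "$id"; then
      printf '%s\n' "$id"
    fi
  done
}

# usage on a real system:
#   zpool status nas | unused_wwn_ids
```

`ls -l /dev/disk/by-id` will show which /dev/sdX each of those ids points to if you want to cross-check against the drive you just slid in.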

3. replace the old disk with the new one

using the id you found in step 2, you can now tell zfs to replace the failed disk with the new one:

```
$ sudo zpool replace nas $OLD_ID $NEW_ID
```

at this point the pool status should still say DEGRADED while zfs scans the rest of the disks in the pool to work out what needs to be written to the new disk:

```
$ zpool status
  pool: nas
 state: DEGRADED
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Sat Jan 17 16:05:22 2026
        15.2G scanned at 778M/s, 7.69M issued at 394K/s, 39.6T total
        0B resilvered, 0.00% done, no estimated completion time
config:

        NAME                          STATE     READ WRITE CKSUM
        nas                           DEGRADED     0     0     0
          raidz2-0                    DEGRADED     0     0     0
            wwn-0x50000399bc300585    ONLINE       0     0     0
            wwn-0x5000cca252c5813c    ONLINE       0     0     0
            wwn-0x50000399bbc00a3b    ONLINE       0     0     0
            wwn-0x5000c500c4feab93    ONLINE       0     0     0
            wwn-0x5000cca252c58dee    ONLINE       0     0     0
            replacing-5               DEGRADED     0     0     0
              wwn-0x5000cca252c4ef8f  REMOVED      0     0     0
              wwn-0x5000c500ebb9e73d  ONLINE       0     0     0

errors: No known data errors
```

then the slow part starts - resilvering the new disk. this might take days to complete depending on the size of your pool:

```
$ zpool status
  pool: nas
 state: DEGRADED
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Sat Jan 17 16:05:22 2026
        17.8T scanned at 33.1G/s, 135G issued at 252M/s, 39.6T total
        22.5G resilvered, 0.33% done, 1 days 21:36:16 to go
config:

        NAME                          STATE     READ WRITE CKSUM
        nas                           DEGRADED     0     0     0
          raidz2-0                    DEGRADED     0     0     0
            wwn-0x50000399bc300585    ONLINE       0     0     0
            wwn-0x5000cca252c5813c    ONLINE       0     0     0
            wwn-0x50000399bbc00a3b    ONLINE       0     0     0
            wwn-0x5000c500c4feab93    ONLINE       0     0     0
            wwn-0x5000cca252c58dee    ONLINE       0     0     0
            replacing-5               DEGRADED     0     0     0
              wwn-0x5000cca252c4ef8f  REMOVED      0     0     0
              wwn-0x5000c500ebb9e73d  ONLINE       0     0     0  (resilvering)

errors: No known data errors
```

this can be a dangerous time for a zpool since it's very read-heavy for the remaining disks (and write-heavy for the new one). keep an eye on the progress in case any other drives fail while the new one is being resilvered.
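if you just want to block until it's done, zfs 2.x has `sudo zpool wait -t resilver nas`. if you'd rather see progress along the way, here's a rough sketch of a polling loop. the function names and the 10 minute default interval are my own choices, and the sed pattern assumes the "N% done" wording in the status output above, which may differ between zfs versions:

```shell
#!/bin/sh
# resilver_progress: read `zpool status` output on stdin and print the "N%"
# figure from the "... resilvered, N% done" line, or nothing if there isn't one
resilver_progress() {
  sed -n 's/.*resilvered, \([0-9.]*%\) done.*/\1/p'
}

# watch_resilver POOL [SECONDS] (hypothetical helper): print a timestamped
# progress line every SECONDS (default 600) while POOL reports a resilver in
# progress, then print the final status
watch_resilver() {
  pool="$1"
  interval="${2:-600}"
  while zpool status "$pool" | grep -q 'resilver in progress'; do
    printf '%s %s\n' "$(date '+%F %T')" "$(zpool status "$pool" | resilver_progress)"
    sleep "$interval"
  done
  zpool status "$pool"
}

# usage on a real system:
#   watch_resilver nas
```

it only reads `zpool status`, so it's safe to leave running in a tmux or screen session; it won't notice a second disk failing on its own, though, so still glance at the READ/WRITE/CKSUM columns in the final status.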

if all goes well then eventually the pool will be back to normal:

```
$ zpool status
  pool: nas
 state: ONLINE
  scan: resilvered 6.60T in 16:10:49 with 0 errors on Sun Jan 18 08:16:11 2026
config:

        NAME                        STATE     READ WRITE CKSUM
        nas                         ONLINE       0     0     0
          raidz2-0                  ONLINE       0     0     0
            wwn-0x50000399bc300585  ONLINE       0     0     0
            wwn-0x5000cca252c5813c  ONLINE       0     0     0
            wwn-0x50000399bbc00a3b  ONLINE       0     0     0
            wwn-0x5000c500c4feab93  ONLINE       0     0     0
            wwn-0x5000cca252c58dee  ONLINE       0     0     0
            wwn-0x5000c500ebb9e73d  ONLINE       0     0     0

errors: No known data errors
```

my pool is ~25 TB and about 90% full, so resilvering took a little over 16 hours. hopefully i won't have to replace any more disks any time soon 🤞🏻
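as a rough sanity check on that 16 hours: as far as i can tell, the "39.6T total" in the resilver status is the pool's allocated space across all six disks (parity included), so each disk holds about a sixth of it, which lines up with the 6.60T that ended up on the new disk. treating zfs's T as TiB (it reports sizes in binary units), the back-of-the-envelope numbers look like this:

```latex
\begin{aligned}
\text{data per disk} &\approx \frac{39.6\ \text{TiB}}{6} \approx 6.6\ \text{TiB} \\
16{:}10{:}49 &= 16 \cdot 3600 + 10 \cdot 60 + 49 = 58\,249\ \text{s} \\
\text{average rate} &\approx \frac{6.60 \times 2^{40}\ \text{B}}{58\,249\ \text{s}} \approx 1.25 \times 10^{8}\ \text{B/s} \approx 119\ \text{MiB/s}
\end{aligned}
```

at a similar rate, a pool with twice as much data on each disk would take roughly twice as long, which is how a resilver on a bigger or fuller pool can stretch into days.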

the disk that failed was manufactured in 2017 and had about 70,000 power-on hours, so it was getting pretty old. even the newest disks in that pool are at around 40,000 hours, so given that i'm also starting to run out of space it might be time to think about upgrading the whole pool. if i end up doing that i'm sure i'll write it up here, so keep an eye out if you're interested.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

written by peter beard on 2026-01-18, last updated 2026-01-18

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
